The number and diversity of Ebury’s backend services allow us to experiment with different tools that help us in our day-to-day work.

A couple of months ago we introduced a new tool to strengthen the tests of one of our services. This service listens to an AWS SQS queue: every time a new message comes in, it maps the data in order to call a third-party SOAP API, interprets the additional information it obtains, and sends the result back via AWS SNS. The system is built with Django, and the inputs and outputs are stored in a Postgres database.
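To make the flow concrete, here is a minimal sketch of a handler for a single SQS message. Every name in it (`Dependencies`, `map_message`, `handle_message`, the `customer_id`/`CustomerId` fields) is hypothetical. The SOAP client, the database and SNS are injected as plain callables, which is also what allows tests to replace them with simulated versions.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Dependencies:
    # Hypothetical seams: in production these would wrap the SOAP client,
    # the Django models (Postgres) and an SNS publish call.
    call_soap_api: Callable[[Dict[str, Any]], Dict[str, Any]]
    store: Callable[[Dict[str, Any], Dict[str, Any]], None]
    publish: Callable[[str], None]


def map_message(body: str) -> Dict[str, Any]:
    # Hypothetical mapping from the SQS message body to the SOAP request parameters.
    payload = json.loads(body)
    return {"CustomerId": payload["customer_id"]}


def handle_message(body: str, deps: Dependencies) -> None:
    """Process one SQS message: map it, call the SOAP API, store and publish the result."""
    request = map_message(body)
    response = deps.call_soap_api(request)
    # "Interpreting" the answer is reduced to wrapping it; the real logic is not shown.
    result = {"customer_id": request["CustomerId"], "details": response}
    deps.store(request, result)
    deps.publish(json.dumps(result))
```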

Our service’s testing suite contains unit tests, integration tests, and system tests. For system and integration tests, one of the options we evaluated was mocking external dependencies inside the code, but we decided against it because it did not give us enough confidence that the integration between components would work as expected in the real environment. We could have compensated with manual testing, but that comes with its own set of downsides: it is less scalable, prone to human error, time-consuming and not reproducible.

Therefore, the decision was to simulate the external dependencies as part of the testing infrastructure.

Following the testing pyramid, we added many unit tests to validate the business logic, fewer integration tests, and a handful of system tests to prove that everything is glued together. To test the integration with AWS we used LocalStack, for the database we used the Django test database, and for the SOAP API we used Mountebank.
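These simulated dependencies can only be plugged in if the service reads its endpoints from configuration. A minimal sketch of that seam, assuming environment variables with hypothetical names (`SOAP_API_URL`, `AWS_TEST_ENDPOINT_URL`) and a placeholder production URL:

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ExternalEndpoints:
    soap_api_url: str
    # None means "let the AWS SDK use the real AWS endpoints".
    aws_endpoint_url: Optional[str]


def load_endpoints(env: Mapping[str, str] = os.environ) -> ExternalEndpoints:
    # Hypothetical variable names and placeholder default URL; the test environment
    # overrides them to point at Mountebank (SOAP API) and LocalStack (SQS/SNS).
    return ExternalEndpoints(
        soap_api_url=env.get("SOAP_API_URL", "https://soap.example.com/Services.svc"),
        aws_endpoint_url=env.get("AWS_TEST_ENDPOINT_URL") or None,
    )
```

In the test environment the variables would point at the Mountebank imposter (port 4545 in the docker-compose example below) and at LocalStack (port 4566 by default); in production they stay unset. The AWS value can be passed to `boto3.client(..., endpoint_url=...)`, where `None` selects the regular AWS endpoint.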

Mountebank to the rescue

Mountebank is an open-source tool that starts a web server mimicking the real API, allowing the code under test to perform real requests over the network (a technique known as service virtualization). Mountebank can impersonate one or more APIs, each represented by an imposter with an associated name and port. Each imposter holds a list of stubs, and each stub pairs the conditions an incoming request must meet (predicates) with the predefined answers Mountebank returns when they are met (responses).
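The matching rules can be illustrated with a toy, in-memory model. This is not Mountebank's code, only a simplified sketch under stated assumptions: it supports nothing but exact `equals` predicates (Mountebank's are case-insensitive by default and offer many more operators), it returns the first matching stub's responses in round-robin order, and it falls back to an empty 200 response, which is Mountebank's default when no stub matches.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class ToyStub:
    equals: Dict[str, Any]  # simplified predicate: exact, case-sensitive field comparison
    responses: List[Dict[str, Any]]
    calls: int = 0

    def matches(self, request: Dict[str, Any]) -> bool:
        # All predicate fields must match; an empty predicate matches every request.
        return all(request.get(key) == value for key, value in self.equals.items())

    def next_response(self) -> Dict[str, Any]:
        # Responses are returned in order, cycling back to the first one.
        response = self.responses[self.calls % len(self.responses)]
        self.calls += 1
        return response


@dataclass
class ToyImposter:
    port: int
    stubs: List[ToyStub]

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        # The first stub whose predicates match wins.
        for stub in self.stubs:
            if stub.matches(request):
                return stub.next_response()
        # No match: fall back to an empty 200 response.
        return {"statusCode": 200}
```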

Let’s explore an example setup of Mountebank, started via docker-compose and impersonating a fictitious API:

docker-compose.yml

```yaml
services:
  mountebank:
    image: andyrbell/mountebank:latest
    ports:
      - "2525:2525" # Mountebank's admin API and UI
      - "4545:4545" # imposter APIs listening port
    volumes:
      - ./imposters:/imposters
    entrypoint: ["mb"]
    command: ["start", "--port", "2525", "--configfile", "/imposters/imposters.ejs", "--loglevel", "warn", "--nologfile"]
```
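`docker-compose up -d` returns once the container has started, which is not necessarily the moment `mb` accepts connections. A minimal sketch of a readiness check that a test session could run first, using only the standard library and assuming the admin port 2525 from the file above (the helper name is hypothetical):

```python
import time
import urllib.request


def wait_for_mountebank(admin_url: str = "http://localhost:2525",
                        timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll Mountebank's admin API until it answers with 200 or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # GET /imposters answers as soon as the server is listening.
            with urllib.request.urlopen(f"{admin_url}/imposters", timeout=interval) as response:
                if response.status == 200:
                    return True
        except OSError:
            # Connection refused/reset, timeouts and HTTP errors (URLError is an OSError).
            pass
        time.sleep(interval)
    return False
```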

imposters/imposters.ejs – config file to define imposters and point to their definitions

```
{
  "imposters": [
    <% include http_api_definition_4545.json %>
  ]
}
```

imposters/http_api_definition_4545.json – imposter definition containing stubs and predicates

```json
{
  "port": 4545,
  "protocol": "http",
  "recordRequests": true,
  "stubs": [
    {
      "predicates": [
        {
          "equals": {
            "method": "POST",
            "path": "/customers/123"
          }
        }
      ],
      "responses": [
        {
          "is": {
            "statusCode": 201,
            "headers": {
              "Location": "http://localhost:4545/customers/123",
              "Content-Type": "application/xml"
            },
            "body": "<customer><email>customer@example.com</email></customer>"
          }
        },
        {
          "is": {
            "statusCode": 400,
            "headers": {
              "Content-Type": "application/xml"
            },
            "body": "<error>email already exists</error>"
          }
        }
      ]
    },
    {
      "responses": [
        {
          "is": { "statusCode": 404 }
        }
      ]
    }
  ]
}
```

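In the example above, the imposters and their definitions are stored upfront and loaded when Mountebank starts, which lets us set up the behaviour of the impersonated third-party APIs locally. Note that the first stub cycles through its responses: the first matching POST receives a 201, the second a 400, the third a 201 again, and so on, while any other request falls through to the catch-all stub and receives a 404.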

Mountebank also exposes a REST API (on the same port as the UI, 2525 in this setup) for interacting with it programmatically: fetching imposters, creating imposters, adding new stubs, and so on.
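The REST API can also be called without a client library. A minimal standard-library sketch that builds the requests for creating an imposter (POST /imposters) and deleting it (DELETE /imposters/:port); the helper names and the admin URL default are assumptions matching the docker-compose example:

```python
import json
import urllib.request
from typing import Any, Dict

ADMIN_URL = "http://localhost:2525"  # admin port from the docker-compose example


def create_imposter_request(imposter: Dict[str, Any], admin_url: str = ADMIN_URL) -> urllib.request.Request:
    # POST /imposters creates a new imposter from its JSON definition.
    return urllib.request.Request(
        f"{admin_url}/imposters",
        data=json.dumps(imposter).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def delete_imposter_request(port: int, admin_url: str = ADMIN_URL) -> urllib.request.Request:
    # DELETE /imposters/:port removes the imposter listening on that port.
    return urllib.request.Request(f"{admin_url}/imposters/{port}", method="DELETE")
```

Sending a request against a running Mountebank is then a matter of `urllib.request.urlopen(create_imposter_request({...}))`.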

Client libraries for this API exist in many languages; we use mbtest, a Python library, and added some pytest fixtures on top of it:

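```python
import pytest
from mbtest.server import MountebankServer

# Host/port match the docker-compose setup; adjust them to your environment.
MOUNTEBANK_HOST = "localhost"
MOUNTEBANK_ADMIN_PORT = 2525
MY_IMPOSTER_NAME = "my_imposter"  # must match the imposter's "name" field


@pytest.fixture(scope="session")
def mountebank():
    # Connect to the already running Mountebank instance and load its imposters.
    server = MountebankServer(host=MOUNTEBANK_HOST, port=MOUNTEBANK_ADMIN_PORT)
    server.import_running_imposters()
    return server


@pytest.fixture(scope="session")
def imposters(mountebank):
    return mountebank.get_running_imposters()


@pytest.fixture(scope="session")
def my_imposter(imposters):
    for imposter in imposters:
        if imposter.name == MY_IMPOSTER_NAME:
            return imposter
    # Fail loudly instead of silently handing None to the tests.
    pytest.fail(f"No running imposter named {MY_IMPOSTER_NAME!r}")
```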

Integrating Mountebank into our tests

Here is an example of how to check that a specific request was made:

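```python
import os

from hamcrest import assert_that, contains_string
from mbtest.matchers import had_request

# Folder with the expected payloads (assumed to sit next to this test module).
CURRENT_DIRECTORY = os.path.dirname(os.path.abspath(__file__))


class TestSearchClient:  # illustrative class name
    def test_that_the_request_payload_is_properly_built(self, my_client, my_imposter):
        # my_client is a project fixture (not shown); it sends a real HTTP request to the imposter.
        my_client.search(query="ebury")

        # Mountebank records request paths with a leading slash.
        path = "/Services.svc/Search"
        # read_xml_body is a project helper (not shown) that loads the expected XML payload.
        expected_body = read_xml_body(os.path.join(CURRENT_DIRECTORY, "expected_body.xml"))

        # Inspect the requests recorded by the imposter ("recordRequests": true).
        assert_that(
            my_imposter,
            had_request().with_path(path).and_body(contains_string(expected_body)),
        )
```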
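The same check can be done straight against the admin API, without mbtest, which can be handy when debugging. A minimal standard-library sketch, assuming the imposter has `"recordRequests": true` (otherwise no requests are recorded); the helper names are hypothetical:

```python
import json
import urllib.request
from typing import Any, Dict, List


def fetch_imposter(port: int, admin_url: str = "http://localhost:2525") -> Dict[str, Any]:
    # GET /imposters/:port returns the imposter definition, including the recorded requests.
    with urllib.request.urlopen(f"{admin_url}/imposters/{port}") as response:
        return json.load(response)


def requests_to(imposter: Dict[str, Any], path: str, method: str = "POST") -> List[Dict[str, Any]]:
    # Keep only the recorded requests with the given HTTP method and path.
    return [
        request
        for request in imposter.get("requests", [])
        if request.get("method") == method and request.get("path") == path
    ]
```

For example, `requests_to(fetch_imposter(4545), "/Services.svc/Search")` returns the recorded search requests, whose `body` fields can then be compared with the expected XML.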

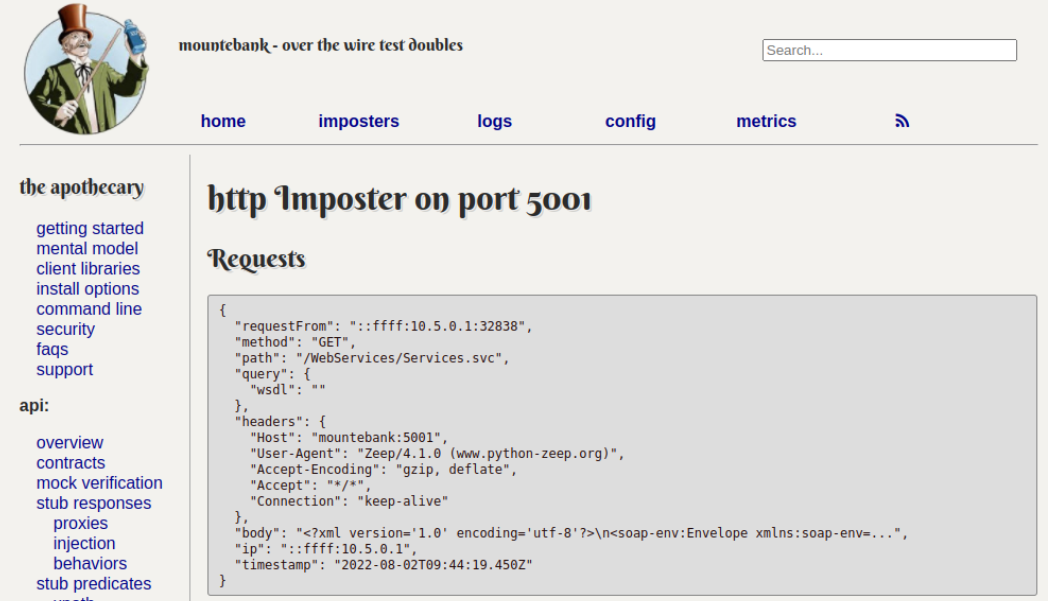

Mountebank also offers a nice web interface (port 2525) where you can see the imposter definitions and access the recorded requests along with the associated responses, which is very useful for debugging.

Besides HTTP, Mountebank supports other built-in protocols (HTTPS, TCP and SMTP), and community plugins add support for others such as gRPC or WebSockets. If the stubbed responses need to incorporate some logic based on the request data, this can be achieved by writing custom JavaScript code and starting the server with the --allowInjection flag; the security implications have to be taken into account, since the injected code runs on the Mountebank host. Another powerful feature is proxying to a real API: Mountebank records the requests and responses and generates the corresponding predicates and stubs.
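As an illustration of the proxy feature, here is a sketch that builds a recording imposter definition in Python. The `proxy` response, the `proxyOnce` mode and `predicateGenerators` come from Mountebank's documented imposter contract; the function name, port and target URL are placeholders.

```python
from typing import Any, Dict, List


def recording_proxy_imposter(port: int, target: str, match_fields: List[str]) -> Dict[str, Any]:
    """Build an imposter that forwards requests to `target` and records the answers."""
    return {
        "port": port,
        "protocol": "http",
        "stubs": [
            {
                "responses": [
                    {
                        "proxy": {
                            "to": target,
                            # proxyOnce: call the real API once, then replay the saved response.
                            "mode": "proxyOnce",
                            # Request fields used to generate predicates for the recorded stubs.
                            "predicateGenerators": [{"matches": {name: True for name in match_fields}}],
                        }
                    }
                ]
            }
        ],
    }
```

The resulting dictionary can be posted to the admin API like any other imposter. Once traffic has been captured, the `mb replay` command switches from recording to replaying by removing the proxies.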

Circling back to the SOAP API we integrate with, we created a thin layer with well-defined inputs and outputs (DTOs) and implemented the API calls using the suds Python library, which simplifies interacting with SOAP APIs by reading the WSDL schema and generating Python objects based on it. We added integration tests pointed at the Mountebank server to ensure that the correct requests are made and that the XML results are properly parsed, as well as tests for the possible failure scenarios. Later on, we refactored the code to use the zeep library, which simplified the implementation: plain dictionaries could be used instead of custom Python objects. While doing so, we relied entirely on the existing integration tests, without changing anything in the tests themselves.
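The thin layer is what made the suds-to-zeep swap cheap: callers and tests only see the DTOs and the `search` function, never the SOAP library. A minimal sketch of the idea, in which the DTOs, the `Search` operation and its `Query`/`Items`/`Name` fields are all hypothetical:

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple

# The SOAP call is injected as a function returning plain dictionaries. With zeep it might be
# `lambda params: zeep.helpers.serialize_object(client.service.Search(**params), dict)`;
# with suds, a wrapper converting the generated objects. (Operation/field names are hypothetical.)
SoapCall = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass(frozen=True)
class SearchRequest:
    query: str


@dataclass(frozen=True)
class SearchResult:
    names: Tuple[str, ...]


def search(call: SoapCall, request: SearchRequest) -> SearchResult:
    """Translate the input DTO to SOAP parameters and the SOAP answer back to an output DTO."""
    raw = call({"Query": request.query})
    items = raw.get("Items") or []
    return SearchResult(names=tuple(item["Name"] for item in items))
```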

Further usages of Mountebank

Besides integration and system tests, Mountebank can prove itself useful in the following scenarios:

- Performance testing: in most cases, duplicating the production environment with all of its dependencies deployed is neither feasible nor cost-effective. Assuming the system under test, rather than its dependencies, is the weakest link, any external APIs can be replaced with Mountebank.

- Contract-driven development: imagine you are integrating with a service that another team will develop. Instead of waiting until the whole implementation is in place to test the integration, the contract can be defined upfront and shared as a Mountebank definition, allowing both teams to work in parallel. In this model, the team owning the API shares the contract with the other teams to facilitate the integration.

- Local development: Mountebank makes it easy to bootstrap the local environment and to reproduce a bug or a corner case that would otherwise be more time-consuming to set up.

- Providing a quick reference to the API endpoints a project uses: this is more of a side effect of having integration tests with Mountebank, and it helps when onboarding new developers.
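The last point can even be automated. A small sketch, assuming imposter definitions shaped like the one earlier in this post, that lists the (method, path) pairs covered by `equals` predicates (the function name is hypothetical):

```python
import json
from typing import Any, Dict, List, Tuple


def stubbed_endpoints(definition: Dict[str, Any]) -> List[Tuple[str, str]]:
    """List the (method, path) pairs covered by "equals" predicates in an imposter definition."""
    endpoints = set()
    for stub in definition.get("stubs", []):
        for predicate in stub.get("predicates", []):
            equals = predicate.get("equals", {})
            if "method" in equals and "path" in equals:
                endpoints.add((equals["method"], equals["path"]))
    return sorted(endpoints)


# Usage with the imposter file from the example setup:
# with open("imposters/http_api_definition_4545.json") as f:
#     print(stubbed_endpoints(json.load(f)))  # [('POST', '/customers/123')]
```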

Hopefully, you will find Mountebank suitable for your own services. Let us know your ideas!
