This post is aimed at Python developers who are used to unittest and are asking themselves why they should try pytest. I hope my experience and explanations will help clear up those doubts.

What’s wrong with unittest?

Have you ever wondered why the look and feel of your unittests is so different from your actual Python code?

Well, unittest was modelled on JUnit, and indeed the average unittest suite reminds you more of Java code than of Python.

While unittest is a very useful library overall, not being ‘pythonic’ is only one of its many flaws. I’m sure you have stumbled on some of its shortcomings, too.

unittest is not “entirely wrong” or conceptually mistaken… It’s just a “bit too much to type”, missing a number of features, heavyweight, and inflexible in several ways. We could simply imagine something better.

pytest was created to address these issues. It wasn’t meant to be the ‘ultimate answer’ that revolutionises testing, but rather a test framework built on the principles and mindset of the Python language, including all those little things you always wished unittest already had. pytest allows you to write concise tests that are easy to follow and easy to trace; it provides excellent error reporting and comes with a number of useful features and plug-ins.

And at the end of the day, you may find that all these little things together are revolutionary.

Why do I prefer pytest?

Just to whet your appetite, here’s a shortlist of my favorite pytest features.

- Concise, readable, ‘pythonic’
  - “reminds me of Python code”
- Verbose failure reports
  - detailed stack traces, variable values, etc.
- Parameterisable tests in a traceable manner
  - preventing repetitive code duplication
- Option to ignore or disable a test (to be fair, this also exists in unittest)
  - a temporary measure, typically due to an upcoming bugfix, etc.

Philosophy

Before we get to the fun part, let me walk you through the obstacles that may seem discouraging.

“pytest is totally different, I haven’t got the time to learn all of that, I’ve got unittest that works well enough. No classes at all, awkward so-called ‘fixtures’ all around, new decorators, sometimes with a cryptic syntax… Do I really need this…?”

I hear what you’re saying. At first glance, pytest does look quite different from what you may be used to. Yet, honestly, it’s not that mysterious.

Please give me a chance to introduce you to this “magic”.

Independence for all!

unittest forces the developer to structure tests in classes as logical units. pytest, on the other hand, organises tests at the level of modules, with plain functions as building blocks. Test classes are not even needed anymore.
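To make the contrast concrete, here is a minimal sketch of a pytest test module: plain functions with bare assert statements, no TestCase subclass and not even a pytest import. The slugify() helper is a hypothetical function under test, defined inline only so that the example runs on its own.

```python
# test_slugify.py: a minimal pytest-style test module.
# Plain functions and bare `assert` statements; no TestCase class needed.


def slugify(title: str) -> str:
    """Hypothetical function under test: lowercase words joined by hyphens."""
    return "-".join(title.lower().split())


def test_slugify_lowercases():
    assert slugify("Hello") == "hello"


def test_slugify_joins_words_with_hyphens():
    # str.split() with no argument also collapses repeated whitespace
    assert slugify("Why  pytest   rocks") == "why-pytest-rocks"
```

Saved as, say, test_slugify.py, running pytest in that directory should collect both functions through their test_ prefix.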

I like to think about a pytest testsuite running something like this:

No, it’s not ‘just a mess’ 😀 (in case that’s how my little diagram looks to you).

Instead, think of it as a number of independent entities, each doing its job in a shared test-session space. Sure, this is very different from unittest, where dependencies were (seemingly) strongly and hierarchically bound. Yet, after all… what’s wrong with the concept above?

Of course, in reality your tests won’t be chaotic: instead of fully “independent” components, you will have loosely coupled elements (more on that soon), together with well-defined conventions for sharing common code across your tests. As for further organisation, it’s as much in your hands as ever.

Fixtures instead of setup/teardown

One thing you have probably heard about pytest is its use of so-called ‘fixtures’.

So let’s take a look: what are they?

A disclaimer: I’m dedicating a slightly longer section to this topic. NOT because pytest is “all about fixtures”, nor because fixtures are “the big hammer I found, so now everything looks like a nail”.

The reason is quite different. Starting to use fixtures is the key moment when you need to restructure both your thinking and your code, and fully let go of big, class-based logical units… and of the way you used to organise your tests before.

And this may not be easy. I personally struggled to switch mindsets, and it took me a while to understand the benefits of this new type of abstraction. I would like to make your journey easier and show that, after all, it is not that much of a mystery.

The concept of fixtures, though largely overlooked, was already available in unittest. But it only became really popular after being “re-invented” by pytest, and we will see why.

We already know that the “pytest approach” involves small, independent components instead of heavyweight classes. As a consequence, dedicated setup and teardown methods no longer make sense without their classes.

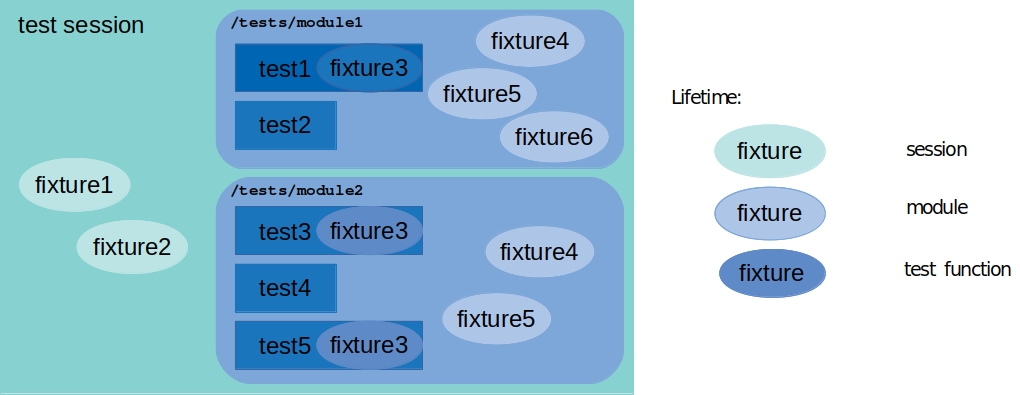

In line with the pytest philosophy, fixtures provide ‘independent’ helper objects that co-exist in the shared space with a lifetime of your choice. These objects can be used in any test function, and are created and destroyed as needed (by default before and after each test function, just like objects in unittest’s setUp() and tearDown()).

As for the lifetime, it could be: function (default), class, module, package or session.

The way I like to think about the lifetime of fixtures is something like this:

Let me illustrate this with some imaginary integration tests (connecting to an actual database).

Fixtures (tests/conftest.py)

    import pytest

    # connect_to_test_db(), add_client(), add_account(), get_client() and
    # get_account() are placeholders for your own database helpers


    # "green" fixture, executed once throughout the test session,
    # before executing the first test referring to it
    @pytest.fixture(scope="session")
    def db_connection():
        connection = connect_to_test_db()
        add_client(connection, client_id='client1')
        add_account(connection, account_id='account1', client_id='client1')
        return connection


    # "light blue" fixtures, executed once for each module,
    # before executing the first test referring to them
    @pytest.fixture(scope="module")
    def client(db_connection):
        return get_client(db_connection, name='client1')


    @pytest.fixture(scope="module")
    def account(db_connection):
        return get_account(db_connection, name='account1')


    # "dark blue" fixture, executed once for each function,
    # before function execution (exactly as unittest setUp())
    @pytest.fixture
    def headers():
        return {'Content-Type': 'application/json',
                'X-Contact-ID': 'x-contact-id'}

Tests (tests/test_account.py)

    # `app` is a placeholder for your application's test client

    # The 'account' and 'client' fixtures (objects) will only trigger
    # one database call at the time of the first usage, and then
    # the value will be re-used throughout the whole module
    def test_account_to_data(account):
        data = account.to_data()
        assert data['owner'] is not None
        assert 'created_at' in data


    def test_account_related_info(account, client):
        related_info = account.get_related_info()
        assert related_info['client_name'] == client.name


    # However, the 'headers' fixture will be re-created for each function
    def test_api_get_account_200(account, headers):
        resp = app.get(f'/account?id={account.account_id}', headers=headers)
        assert resp.status_code == 200
        assert resp.json['account_name'] == account.name


    # Since 'headers' is function-scoped, we can even happily modify
    # its contents: the change won't leak into other tests
    def test_api_get_account_bad_header(account, headers):
        headers['X-Contact-ID'] = ''
        resp = app.get(f'/account?id={account.account_id}', headers=headers)
        assert resp.status_code == 400
        assert resp.json['details'] == 'x_contact_id: minimum length 1'

As you can see in the example, fixtures are requested by test functions as parameters. However, inside the test they don’t behave like functions anymore: pytest replaces each such parameter with the object that the corresponding fixture function returned (or yielded).

In general, don’t think of fixtures as ‘functions’; think of them as ‘objects’ (the return value of the fixture definition function).
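Here is a small, self-contained sketch of that idea, with a hypothetical in-memory dictionary standing in for the database from the example above: the account fixture itself requests the fake_db fixture, and the test receives the dictionary the fixture returned, never the fixture function.

```python
import pytest


@pytest.fixture
def fake_db():
    # Hypothetical in-memory stand-in for a real database connection.
    return {"accounts": {"account1": {"owner": "client1", "balance": 100}}}


@pytest.fixture
def account(fake_db):
    # Fixtures can request other fixtures by name, just like tests can.
    return fake_db["accounts"]["account1"]


def test_account_owner(account):
    # `account` is the dict the fixture returned, not the fixture function.
    assert isinstance(account, dict)
    assert account["owner"] == "client1"
```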

You can also define fixtures inside test modules. In that case, their visibility is limited to that module. While this is suitable for local fixtures, most of the time you are likely to want to reuse them across multiple test modules.

For this purpose, pytest defines a dedicated file, conftest.py: fixtures defined there are visible to all test modules in the same directory and its subdirectories, without needing an import.

As you can see, fixtures don’t just provide the same functionality as setUp/tearDown in unittest; they offer much more flexibility and make it easy to reuse code across your test suite.

Doesn’t this just look… better?

All right, the worst is over; now we’re getting to the fun part.

As I mentioned earlier, pytest syntax is simple, concise and ‘pythonic’.

Here are two code snippets to demonstrate the improved readability. (Yes, you may notice better syntax highlighting as well 😉)

    from unittest import TestCase


    class MyTestClass(TestCase):

        def setUp(self):
            self.myobject = generate_function()

        def tearDown(self):
            self.myobject.destroy()

        def test_behavior1(self):
            result = myfunction(self.myobject)
            self.assertIsNotNone(result.field1)
            self.assertEqual(result.field2, self.myobject.field1 + self.myobject.field2)
            self.assertIsInstance(result.field3, tuple)

vs:

    import pytest


    @pytest.fixture
    def myobject():
        obj = generate_function()
        yield obj        # the test runs at this point
        obj.destroy()    # code after yield is the teardown


    def test_behavior(myobject):
        result = myfunction(myobject)
        assert result.field1 is not None
        assert result.field2 == myobject.field1 + myobject.field2
        assert isinstance(result.field3, tuple)

It really makes a difference for long, complex test modules…

Test parameters

Can a dream come true…? Is this happening for real…?

I’m sure you have copied a unittest test function over and over, altering the input parameters and the expected output (or errors) each time, just like this:

    from unittest import TestCase


    class MyTestClass(TestCase):

        def test_behavior0(self):
            result = myfunction(0)
            self.assertEqual(result, 1)

        def test_behavior1(self):
            result = myfunction(1)
            self.assertEqual(result, 1)

        def test_behavior2(self):
            result = myfunction(2)
            self.assertEqual(result, 2)

        def test_behavior3(self):
            result = myfunction(3)
            self.assertEqual(result, 3)

Of course, these tests are easy to “compress” into a single loop… However, unittest’s error reporting isn’t the most verbose, so in case of a failure it can be difficult to figure out exactly which parameter was problematic.

pytest provides a way to compress the code while preserving all the precious information needed to generate clear and detailed error reports.

    import pytest


    @pytest.mark.parametrize("param,out", [
        (0, 1),
        (1, 1),
        (2, 2),
        (3, 3),
    ])
    def test_behavior(param, out):
        result = myfunction(param)
        assert result == out
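If you want the report to be even easier to trace, each parameter set can be given a readable id with pytest.param(). The sketch below assumes a hypothetical myfunction() that returns its argument but never less than 1, which is consistent with the expected values in the unittest example above.

```python
import pytest


def myfunction(n: int) -> int:
    """Hypothetical function under test: returns n, but never less than 1."""
    return max(n, 1)


@pytest.mark.parametrize(
    "param, out",
    [
        # Each case gets a human-readable id that appears in the test report.
        pytest.param(0, 1, id="zero-clamped-to-one"),
        pytest.param(1, 1, id="one"),
        pytest.param(2, 2, id="two"),
        pytest.param(3, 3, id="three"),
    ],
)
def test_behavior(param, out):
    assert myfunction(param) == out
```

With this, a failing case should show up in the report as, for example, test_behavior[zero-clamped-to-one] instead of under an auto-generated id.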

Failure reports

Just a quick note, since I’ve mentioned improved error reporting a few times already.

pytest failures print multiple levels of the stack trace, together with the values involved in the failing assertion (and, with the --showlocals option, the local variables of each frame). This may seem a bit too verbose at first (especially when a bunch of tests are failing), but once your eyes get used to it, you’ll find it extremely useful.

(And, of course, reporting verbosity is also adjustable, e.g. with -q, -v or --tb=short.)

Expected failure

Have you ever come across test failures outside of the scope of your actual task?

Or been stuck waiting for a bugfix in an underlying library, with failing tests until it lands?

pytest lets you mark tests that are “allowed” to fail, or that should be temporarily skipped. Don’t worry: these tests still appear in the test run output, distinctly marked. This helps you keep an eye on them, making sure disabled tests don’t “get forgotten” but are re-enabled as soon as possible.

For each ignored test you can provide a ‘reason’: typically a one-line explanation, possibly with a Jira ticket number, indicating when the test should be back.
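A sketch of how that looks in practice, using pytest’s built-in skip, skipif and xfail markers. The ticket numbers in the reasons are hypothetical, and the xfail example uses a well-known floating-point rounding surprise as a stand-in for a bug you are waiting on.

```python
import os
import sys

import pytest


# Skipped unconditionally; the reason shows up in the test report.
@pytest.mark.skip(reason="Disabled until PROJ-123 is fixed (hypothetical ticket)")
def test_report_export():
    ...


# Skipped only when the condition is true (here: on Windows).
@pytest.mark.skipif(sys.platform == "win32", reason="Assumes POSIX path separators")
def test_posix_separator():
    assert os.sep == "/"


# Runs, but a failure is reported as "xfail" instead of breaking the run.
# strict=True turns an unexpected pass into a failure.
@pytest.mark.xfail(reason="Known issue, see PROJ-456 (hypothetical ticket)", strict=True)
def test_round_half_up():
    # Fails: 2.675 has no exact binary representation, so round() gives 2.67.
    assert round(2.675, 2) == 2.68
```

strict=True is a handy reminder: once the underlying fix lands and the test starts passing, the run fails until you remove the marker.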

Mocking

There is an additional library called pytest-mock that provides a thin wrapper around the well-known unittest.mock library. So the good (?) news is that everything about mocking is pretty much… exactly the same.

The only difference is rather superficial: pytest-mock provides a fixture called mocker, which gives you access to the functionality of the unittest.mock library. Instead of using decorators, everything is accessed via methods of the mocker object (such as mocker.patch()), and the patches are undone automatically at the end of each test.

All in all

And we could go on at length with the list of smart solutions, features and improvements, like

- powerful command-line interface

- static configuration (same level of granularity as on the command-line)

- useful plug-ins (coverage reporting on-the-fly, test environment for Django applications, etc.)

- “protection” mechanism for flaky tests

…but I hope this was enough to whet your appetite, so no more spoilers here.

We’ll leave the rest of the pleasant surprises for you to discover 🙂