Ebury uses the Financial Information eXchange (FIX) Protocol to facilitate much of our electronic trading. FIX has become the language of global financial markets and is used extensively by bank trading platforms.

This non-proprietary, free and open standard is constantly being developed to support evolving business and regulatory needs, and is used by thousands of firms every day to complete millions of transactions.

Our QuickFIX Service (QFS) was built to provide a consistent way of offering different trading solutions. It is built on QuickFIX, an open-source FIX messaging engine written in C++ that is also available for other programming languages (Python, Java, Go, etc.) through bindings and ports.

Overview

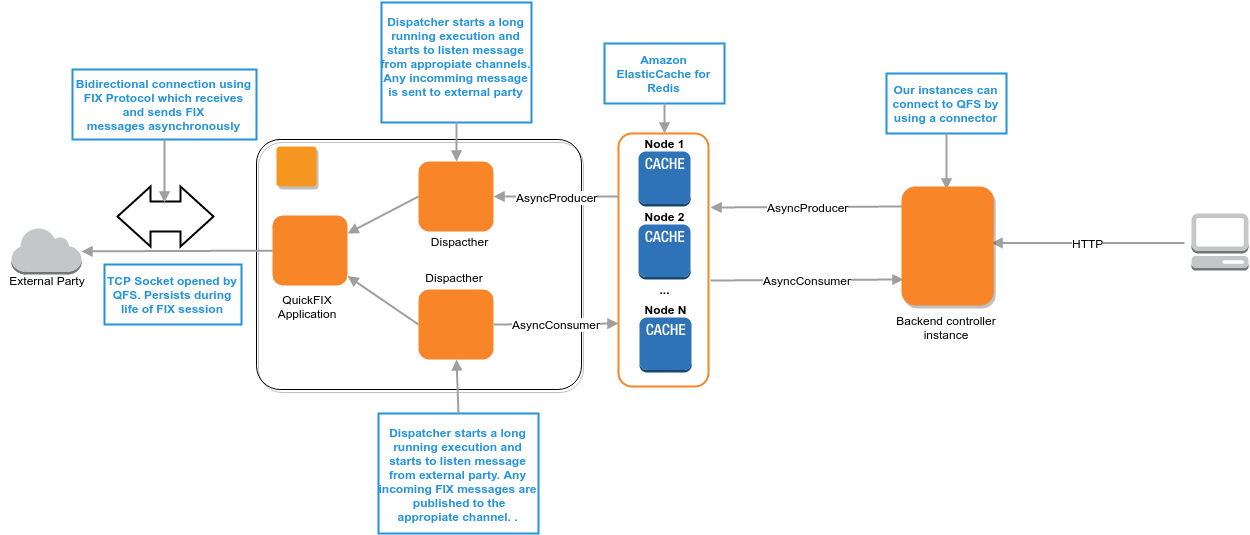

QFS is a microservice that sits between Ebury and its trading partners to automate communication with them. QuickFIX provides an application that sends messages to, and receives messages from, a trading partner asynchronously. The application opens a TCP socket to exchange FIX messages with the external party, and this connection persists for the lifetime of the FIX session.
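To make "FIX messages" concrete, it helps to see what travels over that socket. A FIX message is a sequence of `tag=value` fields separated by the SOH character (`\x01`): it starts with BeginString (8), BodyLength (9) and MsgType (35), and ends with a three-digit CheckSum (10). The following is a minimal sketch, not QuickFIX code (QuickFIX builds and validates messages for you); `encode_fix` is a hypothetical helper, the CompIDs are made up, and the example omits fields a real session requires, such as SendingTime (52).

```python
from typing import List, Tuple

SOH = "\x01"  # FIX field delimiter (ASCII Start Of Header)


def encode_fix(begin_string: str, msg_type: str, fields: List[Tuple[int, str]]) -> bytes:
    """Encode a tag=value FIX message, computing BodyLength (9) and CheckSum (10)."""
    # Body: MsgType (35) first, then the remaining fields in the order given.
    body = f"35={msg_type}{SOH}" + "".join(f"{tag}={value}{SOH}" for tag, value in fields)
    # BodyLength counts every character after the 9= field up to the CheckSum field.
    head = f"8={begin_string}{SOH}9={len(body)}{SOH}"
    # CheckSum: sum of all preceding bytes modulo 256, zero-padded to three digits.
    checksum = sum((head + body).encode("ascii")) % 256
    return (head + body + f"10={checksum:03d}{SOH}").encode("ascii")


if __name__ == "__main__":
    # Hypothetical Heartbeat (35=0) from "CLIENT" to "BROKER" with sequence number 1.
    msg = encode_fix("FIX.4.4", "0", [(49, "CLIENT"), (56, "BROKER"), (34, "1")])
    print(msg.replace(SOH.encode(), b"|"))  # show SOH as "|" for readability
```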

We quickly realised that integrating an asynchronous service into our web services would be a challenge, and we expected the publish–subscribe pattern to help us build an effective system.

Publish–subscribe is a messaging pattern in which senders of messages, called publishers, do not send messages directly to specific receivers, called subscribers. Instead, they categorise published messages into channels without knowing which subscribers, if any, there may be.

Architecture overview

In many pub/sub systems, publishers post messages to an intermediary message broker or event bus, and subscribers register subscriptions with that broker, letting the broker perform the filtering. The broker usually performs a store-and-forward function to route messages from publishers to subscribers.
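The decoupling described above fits in a few lines. This toy in-memory broker (not Redis and not QFS code; `InMemoryBroker` is a hypothetical name) lets a publisher post to a named channel without knowing who is subscribed. Like Redis's `PUBLISH`, its `publish` returns how many subscribers received the message, and a message sent to a channel with no subscribers is simply dropped. Unlike the store-and-forward brokers mentioned above, it stores nothing.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class InMemoryBroker:
    """Toy broker that routes messages by channel name (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        # Subscribers register their interest in a channel with the broker.
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, message: str) -> int:
        # The publisher names only a channel; it never learns who is listening.
        receivers = list(self._subscribers.get(channel, ()))
        for callback in receivers:
            callback(message)
        # Like Redis PUBLISH, report how many subscribers received the message.
        return len(receivers)


if __name__ == "__main__":
    broker = InMemoryBroker()
    received: List[str] = []
    broker.subscribe("prices", received.append)
    print(broker.publish("prices", "EUR/USD quote"))  # prints 1
    print(broker.publish("orders", "new order"))      # prints 0: no subscriber, message dropped
    print(received)                                   # prints ['EUR/USD quote']
```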

We looked into using Redis as our broker, mostly because it implements the pub/sub messaging paradigm, we expected a low volume of data (it could fit in memory), and it was already part of our architecture, since other tools such as Celery use Redis in the same way.

The implementation of QFS has a relatively simple design:

- A client wants to make a request to an external party via FIX.

- The client sends message A to QFS. This message is posted to the broker on the appropriate Request channel, which receives all incoming messages from clients.

- The client subscribes to the Response channel and starts listening for the response.

- A dispatcher, subscribed only to the Request channel, listens for any message. When it gets message A, it forwards the message to the application.

- The application sends the message A to the external party via FIX.

- The application receives message B, the external party's response to message A, via FIX.

- The application calls the dispatcher to post message B to the right Response channel.

- The client receives message B as its response.
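The steps above can be simulated in a single process. This is an illustration under stated assumptions rather than the QFS implementation: an in-memory `Broker` stands in for Redis, `FakeFixApplication` stands in for the QuickFIX application and its counterparty (it answers immediately), and the channel names `qfs:request` and `qfs:response:<cid>` are hypothetical. Each client generates its own cid and listens on a Response channel derived from it, so a response reaches only the client that sent the request.

```python
import json
import uuid
from collections import defaultdict
from typing import Callable, Dict, List

REQUEST_CHANNEL = "qfs:request"    # hypothetical channel name
RESPONSE_PREFIX = "qfs:response:"  # hypothetical: one Response channel per cid


class Broker:
    """Synchronous in-memory stand-in for the Redis broker."""

    def __init__(self) -> None:
        self.subs: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self.subs[channel].append(callback)

    def publish(self, channel: str, message: str) -> int:
        receivers = list(self.subs.get(channel, ()))
        for callback in receivers:
            callback(message)
        return len(receivers)


class FakeFixApplication:
    """Stands in for the QuickFIX application and its FIX counterparty."""

    def __init__(self, on_response: Callable[[str, dict], None]) -> None:
        self.on_response = on_response

    def send(self, cid: str, message_a: dict) -> None:
        # A real application would send message A over the FIX session and get
        # message B back asynchronously; here the "counterparty" answers at once.
        message_b = {"type": "response", "request": message_a}
        self.on_response(cid, message_b)


class Dispatcher:
    def __init__(self, broker: Broker) -> None:
        self.broker = broker
        self.app = FakeFixApplication(on_response=self.post_response)
        broker.subscribe(REQUEST_CHANNEL, self.on_request)  # Request channel only

    def on_request(self, raw: str) -> None:
        # Forward message A (with the cid identifying its sender) to the application.
        envelope = json.loads(raw)
        self.app.send(envelope["cid"], envelope["message"])

    def post_response(self, cid: str, message_b: dict) -> None:
        # Post message B only to the Response channel for this cid.
        self.broker.publish(RESPONSE_PREFIX + cid, json.dumps(message_b))


def client_request(broker: Broker, message_a: dict) -> dict:
    cid = uuid.uuid4().hex
    responses: List[dict] = []
    # Subscribe before publishing so the response cannot arrive while nobody listens.
    broker.subscribe(RESPONSE_PREFIX + cid, lambda raw: responses.append(json.loads(raw)))
    broker.publish(REQUEST_CHANNEL, json.dumps({"cid": cid, "message": message_a}))
    return responses[0]  # already filled in, because every callback runs synchronously


if __name__ == "__main__":
    broker = Broker()
    Dispatcher(broker)
    print(client_request(broker, {"symbol": "EUR/USD", "quantity": 1000}))
```

Two design choices are worth calling out. The client subscribes to its Response channel before publishing the request, so a fast response is never published to an empty channel. And because every callback here runs synchronously, the response is already available when `publish` returns; with a real Redis broker, the client would instead wait on its subscription with a timeout.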

A few interesting properties of this pub/sub system:

- Multiple clients can publish and be subscribed to the same channels without additional overhead.

- Dispatchers handle every message arriving on Request channels and post every FIX message received from an external party to a Response channel. When QFS receives a FIX message, it posts it only to the Response channel for a particular cid, so that only the client that sent the original message receives the response.

- Throughput/latency: during load testing, we consistently measured ~90–130 ms of latency per message with 100 concurrent users (our test setup used a single broker).

Challenges and learnings

Some of the pitfalls of Redis Pub/Sub that we encountered during that time included:

Storing messages

Redis Pub/Sub does not persist messages: a message published to a channel is delivered to the current subscribers and is never stored in the database. Since we needed a record of our messages, we chose to also save the content of each message in a Redis key.
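One way to pair each publication with a stored copy is sketched below, assuming a redis-py client (`redis.Redis`) whose `set` and `publish` methods are used with their standard arguments. `publish_and_store` and any key names passed to it are hypothetical, and the optional expiry is our assumption; the text only says that message content is saved in a Redis key.

```python
import json
from typing import Any, Optional


def publish_and_store(client: Any, channel: str, key: str, message: dict,
                      ttl_seconds: Optional[int] = None) -> int:
    """Publish `message` on `channel` and keep a copy under `key`.

    `client` is assumed to be a redis-py `redis.Redis` instance (or any object with
    compatible `set` and `publish` methods). Pub/Sub itself stores nothing, so the
    SET is what leaves a record behind.
    """
    payload = json.dumps(message)
    # Store first, so the record exists even if the publish reaches no subscriber.
    # ex=None means no expiry; passing a TTL is an optional assumption.
    client.set(key, payload, ex=ttl_seconds)
    # PUBLISH returns the number of subscribers that received the message.
    return client.publish(channel, payload)
```

With a live server, a call could look like `publish_and_store(redis.Redis(), "qfs:response:<cid>", "qfs:message:<cid>:B", message_b)`, where both channel and key names are hypothetical.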

Message reception

If a message is published to a channel that nobody is listening on, it is lost, so we can't guarantee that messages will be consumed. To mitigate this, we implemented a retry operation that republishes the message until a subscriber receives it or a reasonable period of time has passed.
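A retry loop like this can rely on the return value of Redis `PUBLISH`, which is the number of clients that received the message. The sketch assumes a redis-py client; `publish_with_retry`, the 5-second timeout and the 100 ms interval are assumptions, not the values QFS uses. Note that a positive count only means a subscriber received the message, not that it processed it, and in Redis Cluster the count includes only clients connected to the node the message was published on.

```python
import time
from typing import Any


def publish_with_retry(client: Any, channel: str, payload: str,
                       timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Re-publish `payload` until at least one subscriber receives it or `timeout` expires.

    `client` is assumed to be a redis-py `redis.Redis` instance; its `publish`
    returns the number of clients that received the message.
    """
    deadline = time.monotonic() + timeout
    while True:
        if client.publish(channel, payload) > 0:
            return True  # at least one subscriber was listening
        if time.monotonic() >= deadline:
            return False  # give up; the caller decides what to do (log, alert, ...)
        time.sleep(interval)  # wait before trying again
```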

Duplicate messages

In a clustered environment, where multiple instances of our broker component run at the same time, the same message could be sent more than once, so we acquire an atomic, short-lived lock when receiving a message and discard any duplicates.
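A common way to build such a lock is Redis `SET` with the `NX` and `EX` options, which creates the key only if it does not already exist and gives it an expiry, in a single atomic command. The sketch assumes a redis-py client, where `set(..., nx=True, ex=...)` returns `True` if the key was set and `None` otherwise; `claim_message`, the `qfs:lock:` key prefix, the 30-second TTL and what counts as a `message_id` are all hypothetical.

```python
from typing import Any


def claim_message(client: Any, message_id: str, ttl_seconds: int = 30) -> bool:
    """Return True only for the first instance to claim `message_id` within the TTL.

    `client` is assumed to be a redis-py `redis.Redis` instance. SET with NX and EX
    is atomic, so two instances cannot both acquire the same lock.
    """
    acquired = client.set(f"qfs:lock:{message_id}", "1", nx=True, ex=ttl_seconds)
    # redis-py returns True when the key was created and None when it already existed.
    return bool(acquired)
```

Each instance would call `claim_message` for every incoming message and skip processing when it returns `False`; the expiry keeps stale locks from accumulating.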

Are we done?

We still have a lot of work to do on our event-driven microservices architecture. We will continue to explore ways to efficiently integrate new components into our architecture.

Future improvements might involve using different tools (such as Apache Kafka) or taking a different approach (like creating a webhook).